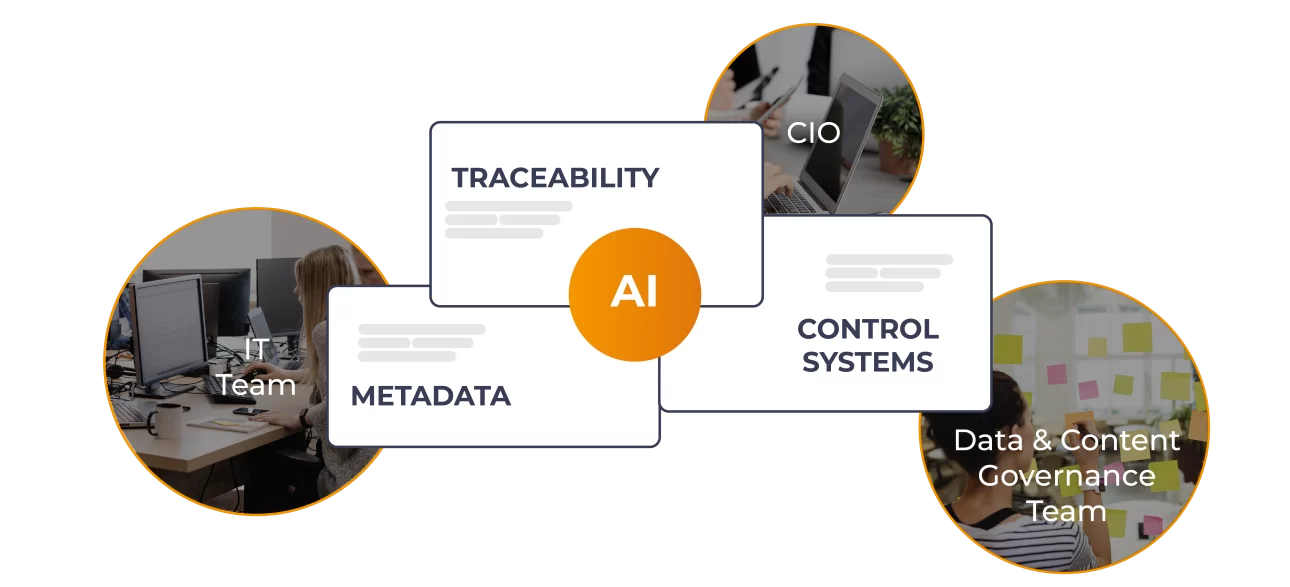

The AI Act is the European law that regulates the use of artificial intelligence by risk level. It establishes what is prohibited, when more stringent requirements are needed, and introduces transparency obligations for content generated or modified by AI. It primarily concerns those who build, integrate, and govern digital platforms and processes in companies: IT teams, CIOs, and data & content governance managers. For them, the most important operational aspects are the traceability of AI-generated content, the quality of metadata, and a readable control system that documents how AI is used.

The law is already in force, and the more operational rules are now coming into play: informing users and labeling AI-generated content, making its provenance traceable with consistent metadata, choosing general-purpose AI (GPAI) models deliberately, and maintaining activity logs that show how AI operates day to day.

In this article, you’ll find practical information on: what changes for labels and watermarking of AI-created content, what metadata and logs are needed to demonstrate the origin and the audit trail, how to set up human monitoring and control, what the Italian AI Act provides, and what criteria to follow to choose the most suitable artificial intelligence models for your company.

The European AI Act starts from a simple idea: not all artificial intelligence technologies are the same. For example, a customer service chatbot cannot have the same rules as a system that evaluates CVs for hiring. This is why the regulation divides AI systems into categories of increasing risk. Each category has specific obligations and, above all, specific deadlines by which compliance is required. The law applies to those who use these systems in their companies, not just to technology providers, so you are responsible for complying with the rules.

Here is the complete calendar with the related areas of use:

The real challenge is not just meeting the AI Act deadlines, but building AI governance that strengthens customer trust. When a company must label its promotional images or website content as “artificially generated,” it risks conveying less authenticity and care. Those who manage digital content and data can anticipate these scenarios by organizing processes, responsibilities, and controls now, so that compliance does not come at the expense of corporate reputation and regulatory obligations become a competitive advantage.

The AI Act introduces transparency and marking requirements: outputs must be marked clearly, whether they are text, images, audio, or video.

Deepfakes (content where AI replaces one person’s face or voice with that of another) must be labeled and, where technically possible, given a digital watermark that machines can recognize. This helps track the content throughout its lifecycle, from creation to distribution.
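The marking rule can be sketched as a small decision function. This is a simplified reading, assuming that content generated or manipulated by AI that could pass as real gets a visible label, plus a machine-readable watermark where the format allows it. The `ContentItem` fields and the marking names are hypothetical and not taken from the regulation or from any platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContentItem:
    """Hypothetical flags a content workflow might track per asset."""
    content_id: str
    ai_generated: bool         # created directly by AI
    ai_manipulated: bool       # existing content altered by AI
    appears_real: bool         # could be mistaken for authentic content
    watermark_supported: bool  # format and tooling allow a machine-readable mark


def required_markings(item: ContentItem) -> List[str]:
    """Return the markings to apply under the simplified rule above."""
    synthetic = item.ai_generated or item.ai_manipulated
    if not (synthetic and item.appears_real):
        return []
    markings = ["visible_label"]
    # The watermark is added only where it is technically possible.
    if item.watermark_supported:
        markings.append("machine_readable_watermark")
    return markings


promo = ContentItem(
    content_id="promo-7",
    ai_generated=True,
    ai_manipulated=False,
    appears_real=True,
    watermark_supported=True,
)
print(required_markings(promo))  # ['visible_label', 'machine_readable_watermark']
```

Storing the result alongside the asset's metadata keeps the labeling decision reviewable later.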

To accompany the adoption, the Commission published the General-Purpose AI Code of Practice (10 July 2025) and Guidelines to clarify when and how obligations apply to GPAI providers (31 July 2025). The Code does not replace the law, but is a voluntary tool for demonstrating compliance; the guidelines specify its purpose and operational expectations.

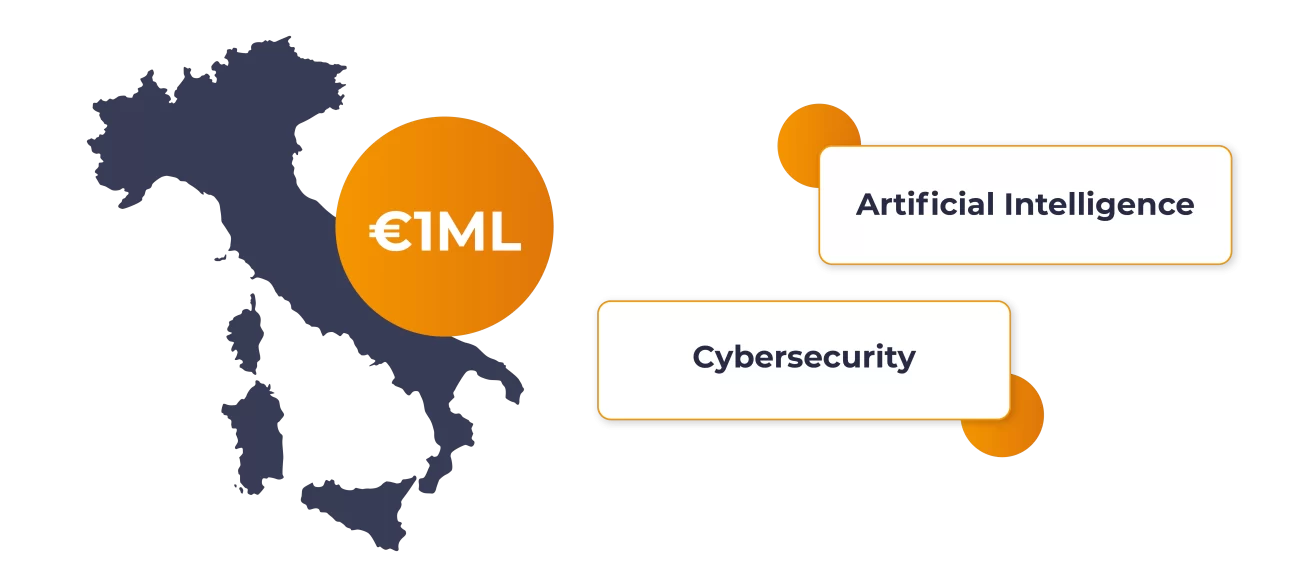

In September 2025, Italy approved a national law that supports the AI Act with coordination and supervision measures, tasking the Agency for Digital Italy (AgID) and the National Cybersecurity Agency (ACN) with implementing the legislation. A 1 billion euro fund is also planned to support AI and cybersecurity in the country. For businesses, this translates into clear points of contact, strengthened controls, and greater attention to transparency, traceability, and human supervision in sensitive sectors.

In the most sensitive use cases, the regulation requires documentation and event logging, that is, the operational traces that an AI system records throughout its lifecycle, such as the start or end of an execution. Without homogeneous metadata on the origin, transformations, and versions of content, it becomes difficult to ensure accountability, investigate incidents, or trace the origin of data. Provenance is not an additional label, but a fundamental requirement: content must include the necessary information from the moment of creation, so that transparency is native and not added later.
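As an illustration of provenance that is native rather than added later, here is a minimal sketch of a metadata record created together with the content. The schema (`ProvenanceRecord`, its field names, and the `origin` values) is an assumption made for this example, not a format defined by the AI Act or by any specific platform.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


def utc_now() -> str:
    """Return the current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class ProvenanceRecord:
    """Hypothetical provenance metadata attached to content at creation."""
    content_id: str
    origin: str                      # "human", "ai_generated" or "ai_modified"
    generator: Optional[str] = None  # tool or model name, if AI was involved
    version: int = 1
    created_at: str = field(default_factory=utc_now)
    transformations: List[dict] = field(default_factory=list)

    def record_transformation(self, action: str, tool: str, ai_involved: bool) -> None:
        """Append one edit step and bump the version."""
        self.transformations.append(
            {"action": action, "tool": tool, "ai_involved": ai_involved, "at": utc_now()}
        )
        self.version += 1
        # Human-made content edited by AI is reclassified as "ai_modified".
        if ai_involved and self.origin == "human":
            self.origin = "ai_modified"


record = ProvenanceRecord(content_id="img-001", origin="human")
record.record_transformation("background_replace", tool="example-image-model", ai_involved=True)
print(json.dumps(asdict(record), indent=2))
```

Because origin, transformations, and version live in one record, a later audit can reconstruct how an asset changed without relying on memory or file names.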

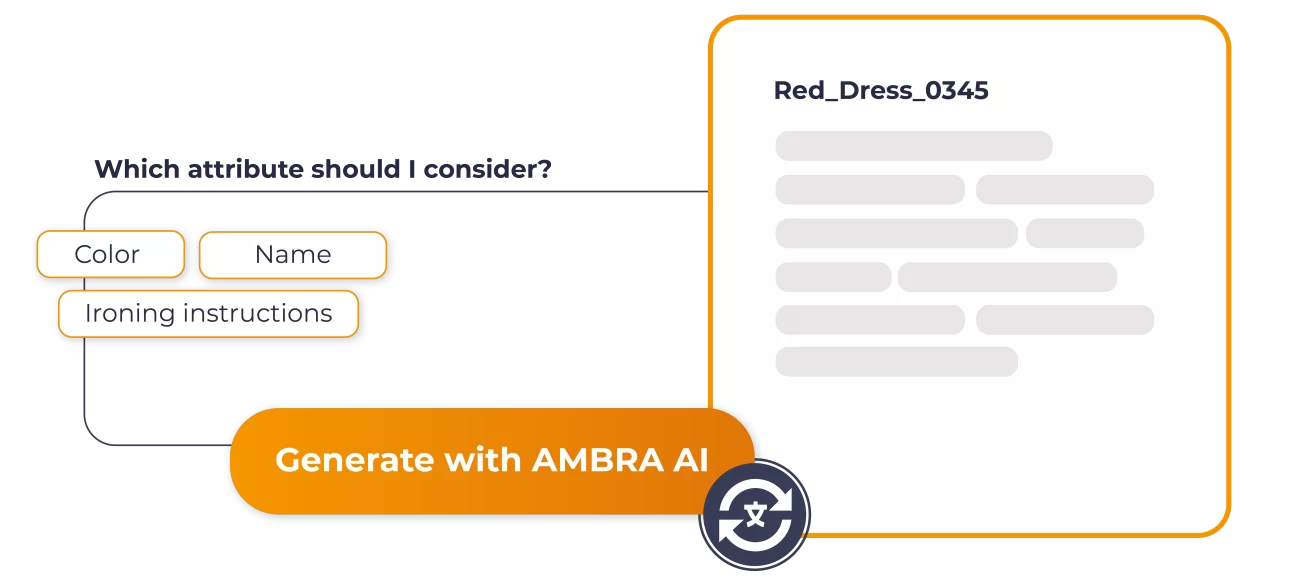

In this context, AMBRA AI, THRON Platform’s native artificial intelligence, helps simplify AI Act compliance and save time by automating repetitive steps from the first upload to the platform. THRON Platform provides the framework with fields and templates, consistent taxonomies, and centralized versioning. AMBRA AI works within this framework:

The result is less repetitive work, fewer errors, and faster publishing. Thus, each piece of content enters the platform with a consistent set of metadata, ready to accommodate labels and warnings for synthetic content and to be tracked throughout its lifecycle. This reduces manual work, makes content more discoverable, and facilitates compliance.

Metadata quality must be accompanied by operational traceability. The law requires event logs and continuous monitoring for high-risk systems; it also requires deployers to retain logs “for an appropriate period” for investigations, audits, and continuous improvement. In essence: without a verifiable history of executions, compliance remains only theoretical.
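A verifiable execution history can be as simple as an append-only list of timestamped events. The sketch below is an in-memory illustration only: the `ExecutionLog` class, its method names, and the 180-day retention value are assumptions, and the appropriate retention period depends on the rules that apply to your system.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import List, Optional


class ExecutionLog:
    """Append-only, in-memory event log (a real system would persist it)."""

    def __init__(self, retention_days: int) -> None:
        self.retention = timedelta(days=retention_days)
        self._events: List[dict] = []

    def record(self, run_id: str, event: str, detail: str = "",
               at: Optional[datetime] = None) -> dict:
        """Store one event, such as the start or end of an execution."""
        entry = {
            "run_id": run_id,
            "event": event,
            "detail": detail,
            "at": (at or datetime.now(timezone.utc)).isoformat(),
        }
        self._events.append(entry)
        return entry

    def history(self, run_id: str) -> List[dict]:
        """Return every event recorded for one execution, in order."""
        return [e for e in self._events if e["run_id"] == run_id]

    def expired(self, now: Optional[datetime] = None) -> List[dict]:
        """Return entries older than the retention period."""
        now = now or datetime.now(timezone.utc)
        return [
            e for e in self._events
            if now - datetime.fromisoformat(e["at"]) > self.retention
        ]


log = ExecutionLog(retention_days=180)  # placeholder retention value
log.record("run-42", "start", "auto-tagging job")
log.record("run-42", "end", "completed")
print(json.dumps(log.history("run-42"), indent=2))
```

Keeping the retention check separate from the write path means entries are never silently dropped; they are only flagged for archiving or review once the period has passed.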

THRON’s new Automation Studio offers a single control center for designing, activating, and monitoring automations. It displays available automations with status and description, centralizes configuration, maintains execution history with searchable logs, respects role permissions, and sends error notifications, producing a readable audit trail for internal and external audits.

For those integrating general-purpose AI (GPAI) models, the European Commission has published a voluntary Code of Practice and Guidelines that explain what to document and how: transparency on training data, respect for copyright, and management of systemic risks.

The Code also includes practical templates, such as the Model Documentation Form, which are useful for supplier evaluations. The GPAI rules came into effect on 2 August 2025: including these requirements in RFPs and contracts reduces uncertainty and shortens compliance times.
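One way to carry these topics into an RFP is a checklist that reports which evidence a supplier has not yet provided. The sketch below is hypothetical: the keys and the `missing_evidence` function are invented for illustration, and the items simply mirror the topics named above, with systemic risk management requested only for models classified as posing systemic risk.

```python
from typing import Dict, List

# Hypothetical checklist derived from the topics named in the text.
GPAI_REQUIREMENTS = {
    "model_documentation_form": "Completed Model Documentation Form",
    "training_data_transparency": "Transparency on training data",
    "copyright_policy": "Respect for copyright",
}
SYSTEMIC_RISK_REQUIREMENTS = {
    "systemic_risk_management": "Management of systemic risks",
}


def missing_evidence(supplier_answers: Dict[str, bool],
                     systemic_risk_model: bool) -> List[str]:
    """List the checklist items the supplier has not confirmed."""
    required = dict(GPAI_REQUIREMENTS)
    if systemic_risk_model:
        required.update(SYSTEMIC_RISK_REQUIREMENTS)
    # An item that is absent from the answers counts as missing.
    return [
        label for key, label in required.items()
        if not supplier_answers.get(key, False)
    ]


answers = {"model_documentation_form": True, "copyright_policy": True}
print(missing_evidence(answers, systemic_risk_model=False))
# ['Transparency on training data']
```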

What is the “EU AI Act” in a nutshell?

It is the European law that regulates AI by dividing it into risk levels: it provides for transparency obligations, controls for the most sensitive cases, and specific rules for GPAI models. It is already in force, with implementation staggered between 2025 and 2027.

When should I label content as AI-generated?

When output is synthetic (i.e. created directly by artificial intelligence) or manipulated and may appear to be real, a clear label is required and, where technically feasible, a watermark that can be read by software and platforms. Including these steps in the metadata reduces the risk of error.

If I use a general-purpose model (GPAI), what should I do today?

Make sure that documentation, user instructions, and copyright compliance are in place, and that a summary of training sources is published. If the model exceeds certain capacity thresholds (systemic risk), additional risk assessment and mitigation measures are required.

Are logs also mandatory for those who deploy the system?

Yes: deployers must retain logs generated by high-risk systems for an appropriate period of time, supporting traceability and investigations. Providers must set up systems that automatically record events.

What does the Italian AI Act mean for businesses?

It establishes coordinating authorities (AgID, ACN), introduces safeguards and penalties for abuses such as harmful deepfakes, and reinforces the requirement for transparency and human oversight. For businesses, this means traceable processes and clear points of contact.

What is the relationship between the AI Act and the “Italian AI Act”?

The European framework is directly applicable, while the Italian framework focuses on coordination, supervision and specific sanctions, aligning authorities and responsibilities. For companies, this changes the way controls are carried out and how they collaborate with regulators.

How do metadata and compliant automation help me?

Consistent metadata and a readable audit trail form the evidentiary basis for compliance; a console with logs and execution history makes processes demonstrable without slowing down operations.